This logic is again designed to ensure high interoperability between the components.

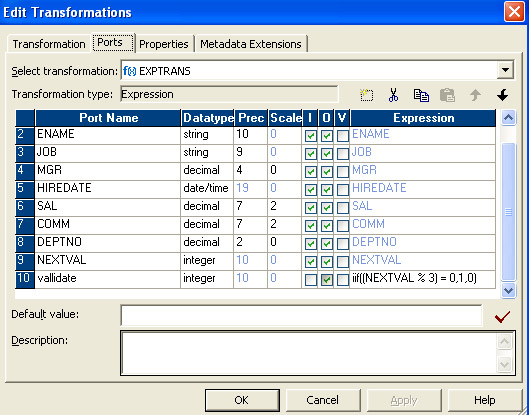

Read the general type INTEGER and choose a corresponding type for Snowflake, which happens to be also INTEGER. When the Snowflake writer consumes this value, it will May store the value BIGINT as a type of a column that type maps to the INTEGER general type. This means that, for example, a MySQL extractor STRING, INTEGER, NUMERIC, FLOAT, BOOLEAN,ĭATE, and TIMESTAMP. Base Typesĭata types from a source are mapped to a destination using a Base Type. The basic idea behind this is that a text type has the best interoperability, so this averts many issues (e.g., someĭate values stored in a MySQL database might not be accepted by a Snowflake database and vice-versa). The non-text column type is used only during a component (transformation or writer) execution. The table metadata to pre-fill the table columns configuration for you.Įven if a data type is available for a column, that column is always stored as text - keep this in mindĮspecially in Transformations, where the output is always cast to text. Also, some writers, e.g., the Snowflake writer use ForĮxample, the transformation COPY mapping allows you to set data types for the tables inside

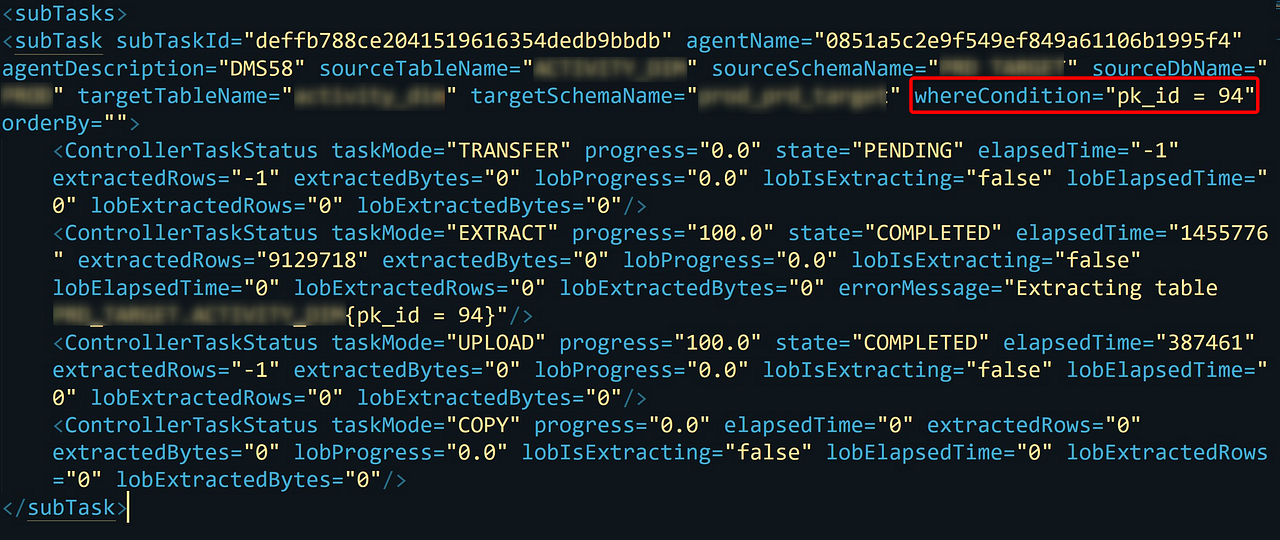

These are stored with each table column and can be used later on when working with the table. Loads a table from the source database, it also records the physical column types from that table. Some components (especially extractors) store metadata about the table columns.
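The base type idea in this section can be illustrated with a minimal sketch. Everything here is hypothetical: the two lookup tables, the function names `to_base_type` and `to_snowflake_type`, and the fallback to STRING for unknown types are assumptions made for illustration, not the platform's actual mapping or API.

```python
# Illustrative only: these tables are assumptions, not the real mapping
# used by any extractor or writer.
MYSQL_TO_BASE = {
    "BIGINT": "INTEGER",
    "INT": "INTEGER",
    "VARCHAR": "STRING",
    "DECIMAL": "NUMERIC",
    "DOUBLE": "FLOAT",
    "DATE": "DATE",
    "DATETIME": "TIMESTAMP",
}

BASE_TO_SNOWFLAKE = {
    "STRING": "VARCHAR",
    "INTEGER": "INTEGER",
    "NUMERIC": "NUMBER",
    "FLOAT": "FLOAT",
    "BOOLEAN": "BOOLEAN",
    "DATE": "DATE",
    "TIMESTAMP": "TIMESTAMP",
}


def to_base_type(source_type: str) -> str:
    """Map a MySQL column type to a base type (hypothetical helper)."""
    # Drop any length/precision suffix, e.g. "BIGINT(20)" -> "BIGINT".
    name = source_type.split("(")[0].strip().upper()
    # Assumption: unknown types fall back to STRING, since text is the
    # most interoperable representation.
    return MYSQL_TO_BASE.get(name, "STRING")


def to_snowflake_type(source_type: str) -> str:
    """Choose a Snowflake type via the base type (hypothetical helper)."""
    return BASE_TO_SNOWFLAKE[to_base_type(source_type)]


if __name__ == "__main__":
    for mysql_type in ("BIGINT", "varchar(255)", "GEOMETRY"):
        print(mysql_type, "->", to_base_type(mysql_type), "->", to_snowflake_type(mysql_type))
```

The point of routing through a base type is that the source and destination never need to know about each other: adding a new source only requires a source-to-base table, and adding a new destination only requires a base-to-destination table.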
